On 21 January, I attended the launch webinar of DEFI (the Digital Education Futures Initiative), an initiative of the University of Cambridge, which seeks to work ‘with partners in industry, policy and practice to explore the field of possibilities that digital technology opens up for education’. The opening keynote speaker was Andreas Schleicher, head of education at the OECD. The OECD’s vision of the future of education is outlined in Schleicher’s book, ‘World Class: How to Build a 21st-Century School System’, freely available from the OECD, but his presentation for DEFI offers a relatively short summary. A recording is available here, and this post will take a closer look at some of the things he had to say.

Schleicher is a statistician and the coordinator of the OECD’s PISA programme. Along with other international organisations, such as the World Economic Forum and the World Bank (see my post here), the OECD promotes the global economization and corporatization of education, ‘based on the [human capital] view that developing work skills is the primary purpose of schooling’ (Spring, 2015: 14). In other words, the main proper function of education is seen to be meeting the needs of global corporate interests. In the early days of the COVID-19 pandemic, with the impact of school closures becoming very visible, Schleicher expressed concern about the disruption to human capital development, but thought it was ‘a great moment’: ‘the current wave of school closures offers an opportunity for experimentation and for envisioning new models of education’. Every cloud has a silver lining, and the pandemic has been a godsend for private companies selling digital learning (see my post about this here) and for those who want to reimagine education in a more corporate way.

Schleicher’s presentation for DEFI was a good opportunity to look again at the way in which organisations like the OECD are shaping educational discourse (see my post about the EdTech imaginary and ELT).

He begins by suggesting that, as a result of the development of digital technology (Google, YouTube, etc.) literacy is ‘no longer just about extracting knowledge’. PISA reading scores, he points out, have remained more or less static since 2000, despite the fact that we have invested (globally) more than 15% extra per student in this time. Only 9% of all 15-year-old students in the industrialised world can distinguish between fact and opinion.

To begin with, one might argue about the reliability and validity of the PISA reading scores (Berliner, 2020). One might also argue, as did a group of 80 education experts in a letter to the Guardian, that the scores themselves are responsible for damaging global education, raising further questions about their validity. One might argue that the increased investment was spent in the wrong way (e.g. on hardware and software, rather than teacher training), because the advice of organisations like the OECD has been uncritically followed. And the statistic about critical reading skills is fairly meaningless unless it is set against comparable metrics over a long time span: there is no reason to believe that susceptibility to fake news is any more of a problem now than it was, say, one hundred years ago. Nor is there any reason to believe that education can solve the fake-news problem (see my post about fake news and critical thinking here). These are more than just quibbles, but the main point that Schleicher is making is that education needs to change.

Schleicher next presents a graph which is designed to show that the amount of time that students spend studying correlates poorly with the amount they learn. His interest is in the (lack of) productivity of educational activities in some contexts. He goes on to argue that there is greater productivity in educational activities when learners have a growth mindset, implying (but not stating) that mindset interventions in schools would lead to a more productive educational environment.

Schleicher appears to confuse what students learn with the things they have learnt that have been measured by PISA. The two are obviously rather different, since PISA is only interested in a relatively small subset of the possible learning outcomes of schooling. His argument for growth mindset interventions hinges on the assumption that such interventions will lead to gains in reading scores. However, his graph demonstrates a correlation between growth mindset and reading scores, not a causal relationship. A causal relationship has not been clearly and empirically demonstrated (see my post about growth mindsets here) and recent work by Carol Dweck and her associates (e.g. Yeager et al., 2016), as well as other researchers (e.g. McPartlan et al., 2020), indicates that the relationship between gains in learning outcomes and mindset interventions is extremely complex.

Schleicher then turns to digitalisation and briefly discusses the positive and negative affordances of technology. He eulogizes platform companies before showing a slide designed to demonstrate that (in the workplace) there is a strong correlation between ICT use and learning. He concludes: ‘the digital world of learning is a hugely empowering world of learning’.

A brief paraphrase of this very disingenuous part of the presentation would be: technology can be good and bad, but I’ll only focus on the former. The discourse appears balanced, but it is anything but.

During the segment, Schleicher argues that technology is empowering, and offers as examples ‘the most successful companies these days, they’re not created by a big industry, they’re created by a big idea’. This is plainly counterfactual. In the case of Alphabet and Facebook, profits did not follow from a ‘big idea’: the ideas changed as the companies evolved.

Schleicher then sketches a picture of an unpredictable future (pandemics, climate change, AI, cyber wars, etc.) as a way of framing the importance of being open (and resilient) to different futures and how we respond to them. He offers two different kinds of response: maintenance of the status quo, or ‘outsourcing’ of education. The pandemic, he suggests, has made more countries aware that the latter is the way forward.

In his discussion of the maintenance of the status quo, Schleicher talks about the maintenance of educational monopolies. By this, he must be referring to state monopolies on education: this is how neoliberals like to refer to state-sponsored education. But the extent to which, in 2021 in many OECD countries, the state has any kind of monopoly on education is very open to debate. Privatization is advancing fast. Even in 2015, the World Education Forum’s ‘Final Report’ stated that ‘the scale of engagement of nonstate actors at all levels of education is growing and becoming more diversified’. Schleicher goes on to talk about ‘large, bureaucratic school systems’, suggesting that such systems cannot be sufficiently agile, adaptive or responsive. ‘We should ask this question,’ he says, but his own answer to it is totally transparent: ‘changing education can be like moving graveyards’ is the title of the next slide. Education needs to be more like the health sector, he claims, which has been able to develop a COVID vaccine in such a short period of time. We need an education industry that underpins change in the same way as the health industry underpins vaccine development. In case his message isn’t yet clear enough, I’ll spell it out: education needs to be privatized still further.

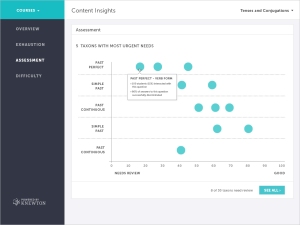

Schleicher then turns to the ways in which he feels that digital technology can enhance learning. These include the use of AR, VR and AI. Technology, he says, can make learning so much more personalized: ‘the computer can study how you study, and then adapt learning so that it is much more granular, so much more adaptive, so much more responsive to your learning style’. He moves on to the field of assessment, again singing the praises of technology in the ways that it can offer new modes of assessment and ‘increase the reliability of machine rating for essays’. Through technology, we can ‘reunite learning and assessment’. Moving on to learning analytics, he briefly mentions privacy issues, before enthusing at greater length about the benefits of analytics.

Learning styles? Really? The reliability of machine scoring of essays? How reliable exactly? Data privacy as an area worth only a passing mention? The use of sensors to measure learners’ responses to learning experiences? Any pretence of balance appears now to have been shed. This is in-your-face sales talk.

Next up is a graph which purports to show the number of teachers in OECD countries who use technology for learners’ project work. This is followed by another graph showing the number of teachers who have participated in face-to-face and online CPD. The point of this is to argue that online CPD needs to become more common.

I couldn’t understand what point he was trying to make with the first graph. For the second, it is surely the quality of the CPD, rather than the channel, that matters.

Schleicher then turns to two further possible responses of education to unpredictable futures: ‘schools as learning hubs’ and ‘learn-as-you-go’. In the latter, digital infrastructure replaces physical infrastructure. Neither is explored in any detail. The main point appears to be that we should consider these possibilities, weighing up as we do so the risks and the opportunities (see slide below).

Useful ways to frame questions about the future of education, no doubt, but Schleicher is operating with a set of assumptions about the purpose of education, which he chooses not to explore. His fundamental assumption – that the primary purpose of education is to develop human capital in and for the global economy – is not one that I would share. However, if you do take that view, then privatization, economization, digitalization and the training of social-emotional competences are all reasonable corollaries, and the big question about the future concerns how to go about this in a more efficient way.

Schleicher’s (and the OECD’s) views are very much in accord with the libertarian values of the right-wing philanthro-capitalist foundations of the United States (the Gates Foundation, the Broad Foundation and so on), funded by Silicon Valley and hedge-fund managers. It is to the US that we can trace the spread and promotion of these ideas, but it is also, perhaps, to the US that we can now turn in search of hope for an alternative educational future. The privatization / disruption / reform movement in the US has stalled in recent years, as it has become clear that it failed to deliver on its promise of improved learning. The resistance to privatized and digitalized education is chronicled in Diane Ravitch’s latest book, ‘Slaying Goliath’ (2020). School closures during the pandemic may have been ‘a great moment’ for Schleicher, but for most of us, they have underscored the importance of face-to-face free public schooling. Now, with the electoral victory of Joe Biden and the appointment of a new US Secretary for Education (still to be confirmed), we are likely to see, for the first time in decades, an education policy that is firmly committed to public schools. The US is by far the largest contributor to the budget of the OECD – more than twice any other nation. Perhaps a rethink of the OECD’s educational policies will soon be in order?

References

Berliner D.C. (2020) The Implications of Understanding That PISA Is Simply Another Standardized Achievement Test. In Fan G., Popkewitz T. (Eds.) Handbook of Education Policy Studies. Springer, Singapore. https://doi.org/10.1007/978-981-13-8343-4_13

McPartlan, P., Solanki, S., Xu, D. & Sato, B. (2020) Testing Basic Assumptions Reveals When (Not) to Expect Mindset and Belonging Interventions to Succeed. AERA Open, 6 (4): 1 – 16 https://journals.sagepub.com/doi/pdf/10.1177/2332858420966994

Ravitch, D. (2020) Slaying Goliath: The Passionate Resistance to Privatization and the Fight to Save America’s Public Schools. New York: Vintage Books

Schleicher, A. (2018) World Class: How to Build a 21st-Century School System. Paris: OECD Publishing https://www.oecd.org/education/world-class-9789264300002-en.htm

Spring, J. (2015) Globalization of Education 2nd Edition. New York: Routledge

Yeager, D. S., et al. (2016) Using design thinking to improve psychological interventions: The case of the growth mindset during the transition to high school. Journal of Educational Psychology, 108(3), 374–391. https://doi.org/10.1037/edu0000098

Near the start of Mayer-Schönberger and Cukier’s enthusiastic sales pitch (Learning with Big Data: The Future of Education) for the use of big data in education, there is a discussion of Duolingo. They quote Luis von Ahn, the founder of Duolingo, as saying ‘there has been little empirical work on what is the best way to teach a foreign language’. This is so far from the truth as to be laughable. Von Ahn’s comment, along with the Duolingo product itself, is merely indicative of a lack of awareness of the enormous amount of research that has been carried out. But what could the data gleaned from the interactions of millions of users with Duolingo tell us of value? The example that is given is the following. Apparently, ‘in the case of Spanish speakers learning English, it’s common to teach pronouns early on: words like “he,” “she,” and “it”.’ But, Duolingo discovered, ‘the term “it” tends to confuse and create anxiety for Spanish speakers, since the word doesn’t easily translate into their language […] Delaying the introduction of “it” until a few weeks later dramatically improves the number of people who stick with learning English rather than drop out.’ Was von Ahn unaware of the decades of research into language transfer effects? Did von Ahn (who grew up speaking Spanish in Guatemala) need all this data to tell him that English personal pronouns can cause problems for Spanish learners of English? Was von Ahn unaware of the debates concerning the value of teaching isolated words (especially grammar words!)?
